HoloSound

Project Description

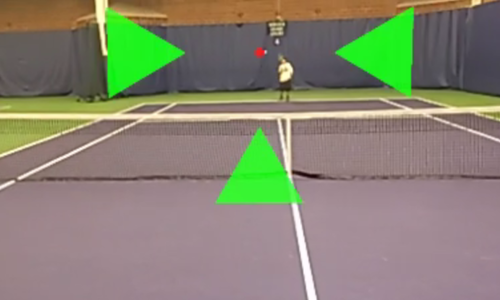

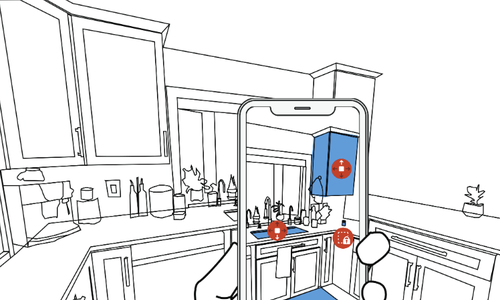

Head-mounted displays can provide private and glanceable speech and sound feedback to deaf and hard of hearing people, yet prior systems have largely focused on speech transcription. We introduce HoloSound, a HoloLens-based augmented reality (AR) prototype that uses deep learning to classify and visualize sound identity and location in addition to providing speech transcription.

Publications

Talks

Sound Sensing and Feedback Techniques for d/Deaf and Hard of Hearing Users

Mar 12, 2021 | Carnegie Mellon University, HCII Lecture

Virtual

Sound Sensing and Feedback Techniques for d/Deaf and Hard of Hearing Users

Feb 18, 2021 | UW Speech and Hearing Center

Virtual

Sound Sensing and Feedback Techniques for d/Deaf and Hard of Hearing Users

Dec 11, 2020 | UW General Exam

Virtual