SonoCraftAR

Project Description

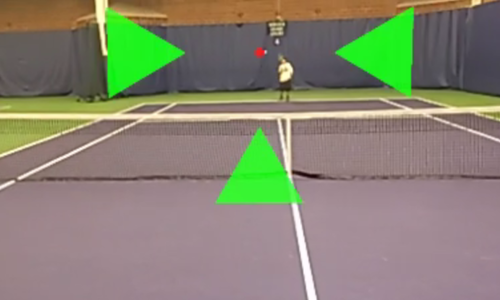

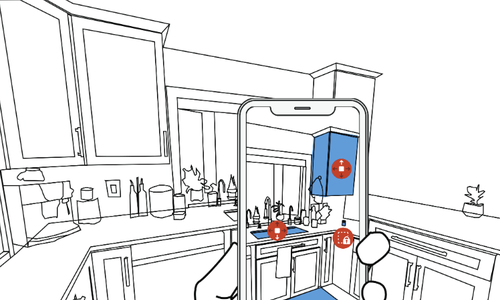

Augmented reality (AR) has shown promise for supporting Deaf and hard‑of‑hearing (DHH) individuals by captioning speech and visualizing environmental sounds, yet existing systems do not allow users to create personalized sound visualizations. We present SonoCraftAR, a proof‑of‑concept prototype that empowers DHH users to author custom sound‑reactive AR interfaces using typed natural language input. SonoCraftAR integrates real‑time audio signal processing with a multi‑agent LLM pipeline that procedurally generates animated 2D interfaces via a vector graphics library. The system extracts the dominant frequency of incoming audio and maps it to visual properties such as size and color, making the visualizations respond dynamically to sound. This early exploration demonstrates the feasibility of open‑ended sound‑reactive AR interface authoring and discusses future opportunities for personalized, AI‑assisted tools to improve sound accessibility.
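The audio-to-visual mapping described above can be illustrated with a minimal sketch. This is not the SonoCraftAR implementation: the description does not say how the dominant frequency is extracted or how it is mapped. The sketch assumes a naive discrete Fourier transform over a short mono buffer and a logarithmic mapping from frequency to a size multiplier and an RGB color. The function names `dominant_frequency` and `frequency_to_visual`, the 100 to 4000 Hz range, and the blue-to-red color ramp are all hypothetical choices made for illustration.

```python
import cmath
import colorsys
import math


def dominant_frequency(samples, sample_rate):
    """Return the frequency (Hz) of the strongest non-DC bin of a naive DFT.

    Assumption: a plain O(n^2) DFT stands in for whatever real-time
    analysis the actual system uses.
    """
    n = len(samples)
    best_bin, best_mag = 0, 0.0
    for k in range(1, n // 2):  # skip DC (k = 0) and stay below Nyquist
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * i / n)
            for i, x in enumerate(samples)
        )
        if abs(coeff) > best_mag:
            best_bin, best_mag = k, abs(coeff)
    return best_bin * sample_rate / n


def frequency_to_visual(freq_hz, min_hz=100.0, max_hz=4000.0):
    """Map a frequency to a hypothetical (scale, rgb) pair on a log axis."""
    clamped = min(max(freq_hz, min_hz), max_hz)
    t = math.log(clamped / min_hz) / math.log(max_hz / min_hz)  # 0.0 to 1.0
    scale = 0.5 + 1.5 * t  # size multiplier from 0.5x (low) to 2.0x (high)
    rgb = colorsys.hsv_to_rgb(0.66 * (1 - t), 1.0, 1.0)  # blue (low) to red (high)
    return scale, rgb


if __name__ == "__main__":
    rate, n = 8000, 256
    # Synthetic 1 kHz test tone in place of microphone input.
    tone = [math.sin(2 * math.pi * 1000 * i / rate) for i in range(n)]
    freq = dominant_frequency(tone, rate)
    print(freq, frequency_to_visual(freq))
```

A logarithmic axis is used here because perceived pitch is roughly logarithmic in frequency, so equal visual steps correspond to equal musical intervals. A real-time system would more likely use an FFT with windowing and some smoothing across frames to keep the visuals from flickering.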

Publications

Talks

SonoCraftAR: Towards Supporting Personalized Authoring of Sound-Reactive AR Interfaces by Deaf and Hard of Hearing Users

Oct 08, 2025 | IDEATExR

Daejeon, South Korea

Jaewook Lee, Davin Win Kyi, Leejun Kim, Jenny Peng, Gagyeom Lim, Jeremy Zhengqi Huang, Dhruv Jain, Jon E. Froehlich