Project Description

Publications

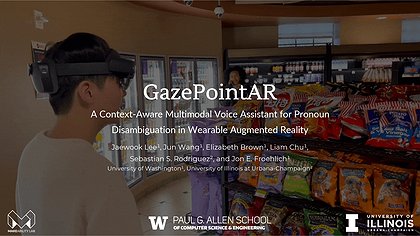

GazePointAR: A Context-Aware Multimodal Voice Assistant for Pronoun Disambiguation in Wearable Augmented Reality

CHI 2024 | Acceptance Rate: 26.3% (1060 / 4028)

Towards Designing a Context-Aware Multimodal Voice Assistant for Pronoun Disambiguation: A Demonstration of GazePointAR

Extended Abstract Proceedings of UIST 2023

Talks

May 15, 2024 | CHI

Honolulu, Hawaiʻi
